Hao Dong, Xin Xu, Lei Wang, and Fangling Pu.

Sensors, 18(2): 611, 2018.

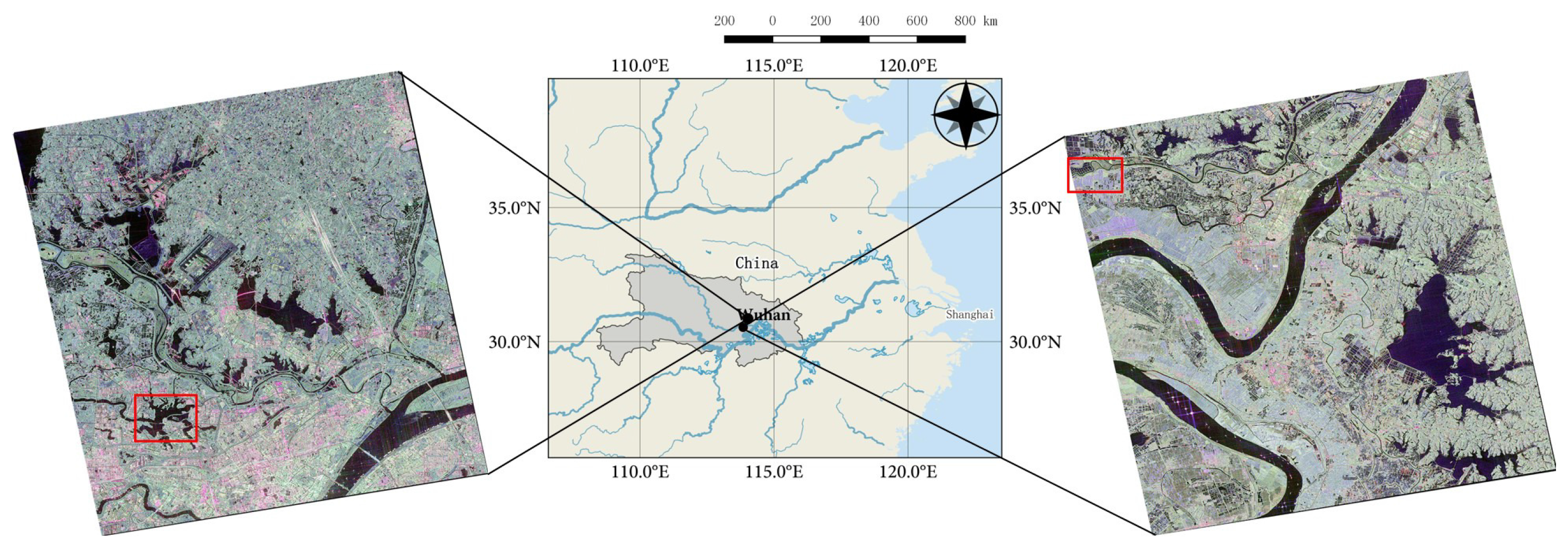

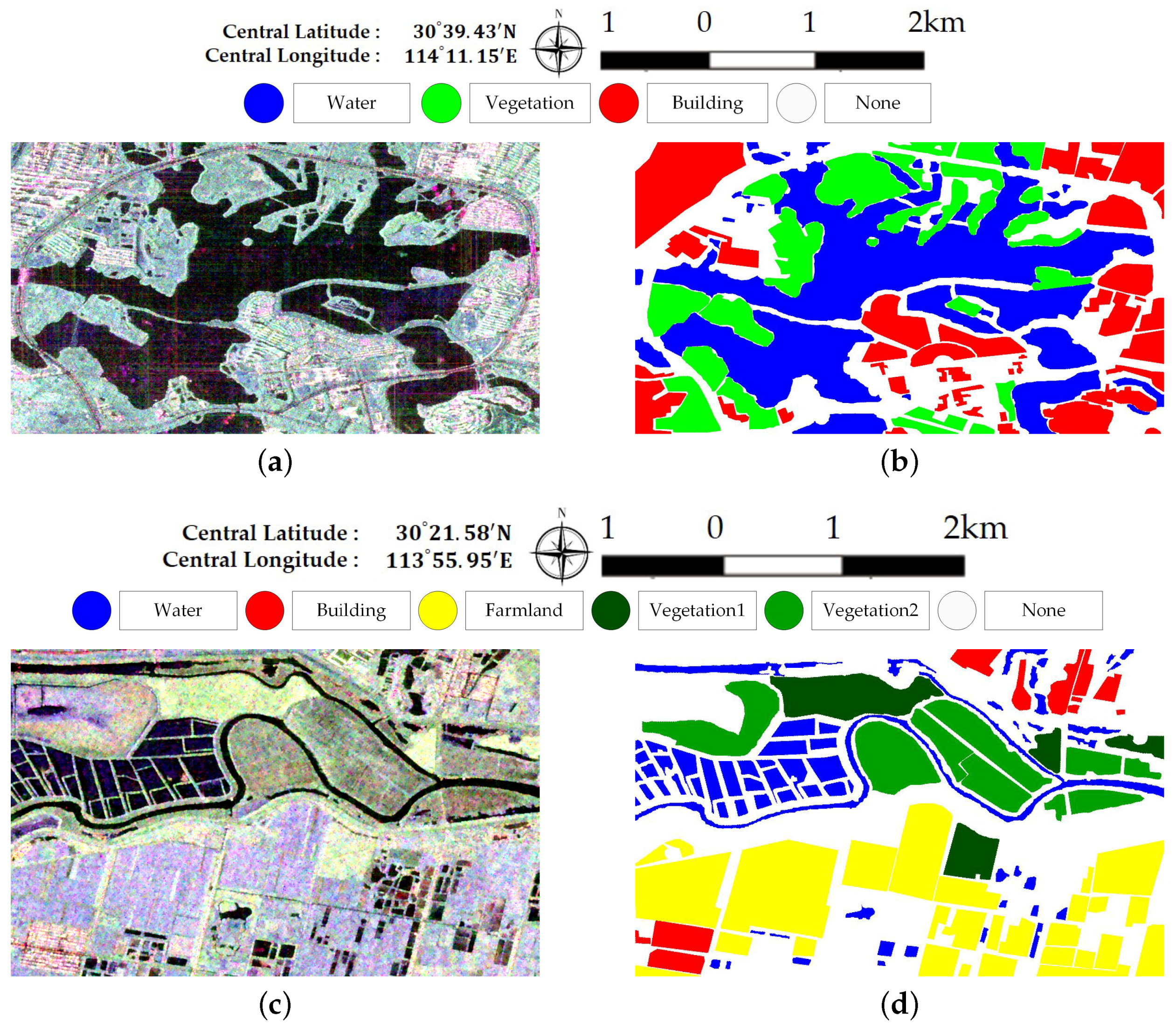

The launch of the Chinese Gaofen-3 (GF-3) satellite will provide ample synthetic aperture radar (SAR) images in different imaging modes for land cover classification and other potential uses in the next few years. This paper proposes an efficient and practical classification framework for GF-3 polarimetric SAR (PolSAR) images. The framework consists of four simple parts: polarimetric feature extraction and stacking, initial classification via XGBoost, superpixel generation by statistical region merging (SRM) on the Pauli RGB image, and a post-processing step that determines the label of each superpixel by modified majority voting. Fast initial classification via XGBoost, combined with the incorporation of spatial information through superpixel-based modified majority voting, could make the method efficient in practical use. Preliminary experimental results on real GF-3 PolSAR images and the AIRSAR Flevoland data set validate the efficacy and efficiency of the proposed framework. The results demonstrate that the quality of GF-3 PolSAR data is adequate for classification purposes and that incorporating spatial information is important for improving overall performance.
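The post-processing step described above can be illustrated with a minimal sketch. It assumes a per-pixel label map (for example from an initial classifier) and a superpixel map of the same shape, and it applies plain majority voting; the paper's specific modification to the voting rule is not described in the abstract and is not reproduced here. The function name `majority_vote` is hypothetical.

```python
import numpy as np

def majority_vote(labels, superpixels):
    """Relabel every pixel with the most frequent label in its superpixel.

    labels      : 2-D array of non-negative integer class labels per pixel.
    superpixels : 2-D array of integer superpixel ids, same shape as labels.
    Note: this is plain majority voting, not the paper's modified rule.
    """
    labels = np.asarray(labels)
    superpixels = np.asarray(superpixels)
    out = np.empty_like(labels)
    for sp in np.unique(superpixels):
        mask = superpixels == sp
        # Count label occurrences inside this superpixel; ties go to the
        # smallest label because argmax returns the first maximum.
        counts = np.bincount(labels[mask])
        out[mask] = counts.argmax()
    return out

if __name__ == "__main__":
    initial = np.array([[0, 0, 1],
                        [0, 1, 1]])
    regions = np.array([[0, 0, 1],
                        [0, 0, 1]])
    print(majority_vote(initial, regions))
```

Voting inside superpixels is one simple way to bring spatial context into a pixel-wise classifier: isolated misclassified pixels are overruled by their neighbours in the same region.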

Hao Dong, Xin Xu, Haigang Sui, Feng Xu, and Junyi Liu.

IEEE Transactions on Geoscience and Remote Sensing, 55(10): 5777-5789, 2017.

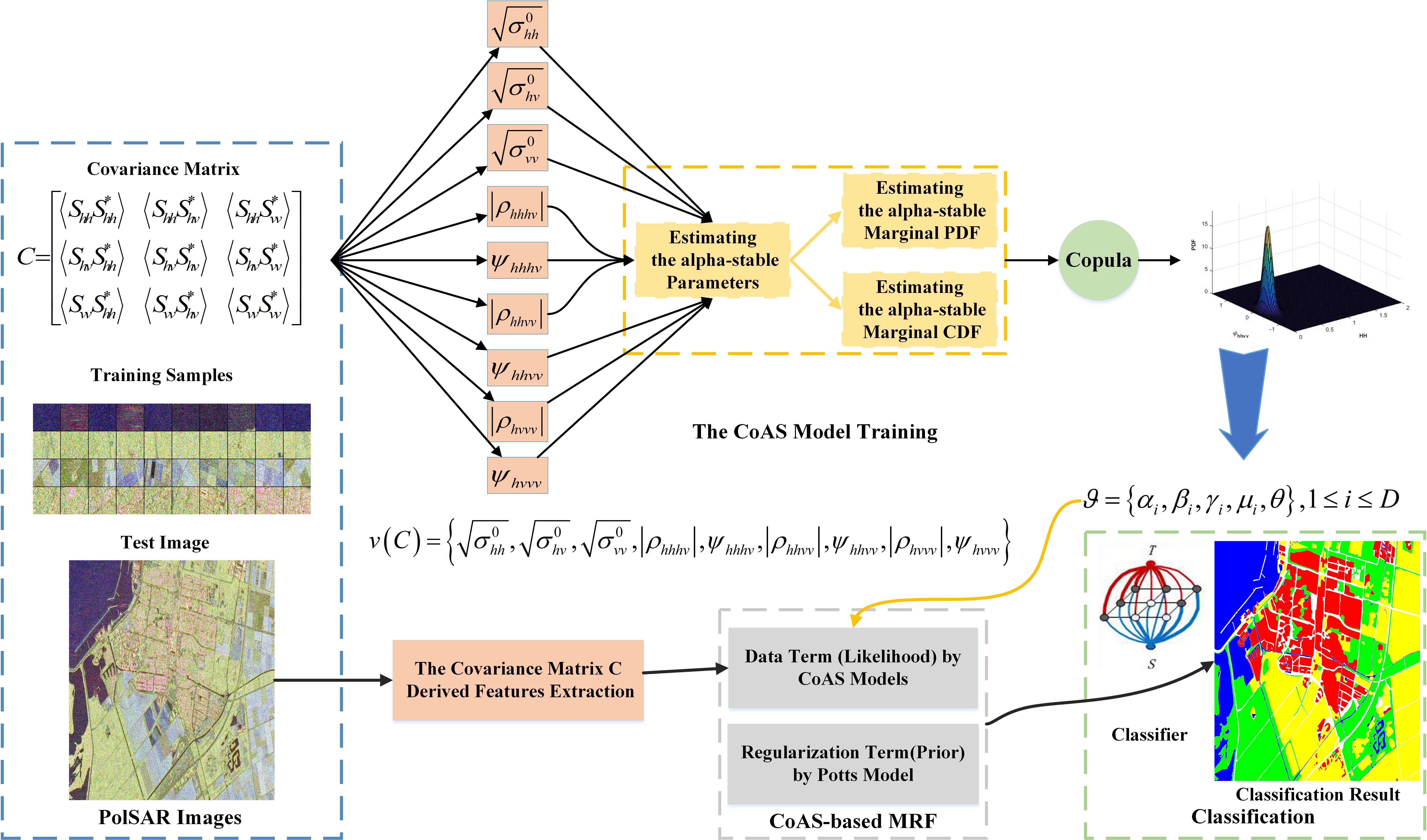

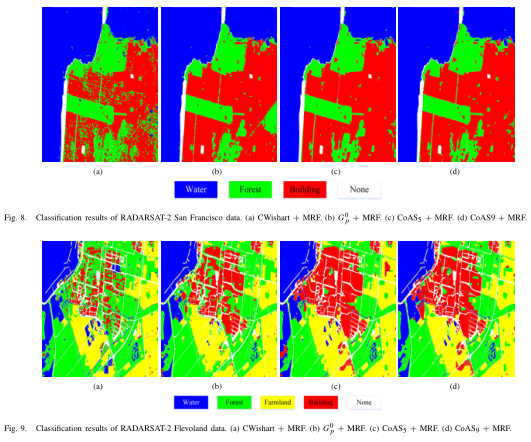

Polarimetric features are essential to polarimetric synthetic aperture radar (PolSAR) image classification because they provide a better physical understanding of terrain targets. The designed classifiers often achieve better performance via feature combination. However, a simple combination of polarimetric features cannot fully represent the information in PolSAR data, and the statistics of polarimetric features have not been extensively studied. In this paper, we propose a joint statistical model for polarimetric features derived from the covariance matrix. The model is based on copulas for multivariate distribution modeling and the alpha-stable distribution for marginal probability density function estimation. We denote this model CoAS. The proposed model has several advantages. First, the model is designed for real-valued polarimetric features, which avoids the complex matrix operations associated with the covariance and coherency matrices. Second, these features consist of amplitudes, correlation magnitudes, and phase differences between polarization channels. They efficiently encode information in PolSAR data, which lends itself to interpretability of results in the PolSAR context. Third, the CoAS model takes advantage of both the copula and the alpha-stable distribution, which makes it general and flexible for constructing a joint statistical model that accounts for dependence between features. Finally, a supervised Markovian classification scheme based on the proposed CoAS model is presented. The classification results on several PolSAR data sets validate the efficacy of CoAS in PolSAR image modeling and classification. The proposed CoAS-based classifiers yield superior performance, especially in building areas. The overall accuracies are 5%-10% higher than those of other benchmark statistical model-based classification techniques.
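The copula construction mentioned above rests on Sklar's theorem, which separates a joint density into its marginals and a dependence term. As a sketch in notation chosen here (not taken from the paper), for real-valued features $x_1,\dots,x_d$ with marginal densities $f_i$ and cumulative distribution functions $F_i$:

$$
f(x_1,\dots,x_d) = c\big(F_1(x_1),\dots,F_d(x_d)\big)\,\prod_{i=1}^{d} f_i(x_i)
$$

Here $c$ is the copula density on $[0,1]^d$, which carries all of the dependence between features. In a model of the kind the abstract describes, each $f_i$ would be an alpha-stable density; the copula family used is not stated in the abstract, so $c$ is left generic.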

Rong Gui, Xin Xu, Hao Dong, and Fangling Pu.

Remote Sensing, 8(9): 708, 2016.

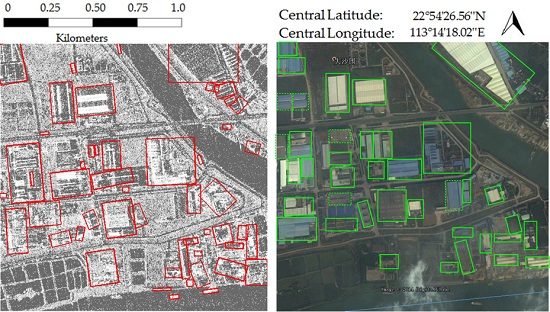

Accurate building information plays a crucial role in urban planning, human settlements and environmental management. Synthetic aperture radar (SAR) images, which deliver images with metric resolution, allow for analyzing and extracting detailed information on urban areas. In this paper, we consider the problem of extracting individual buildings from SAR images based on domain ontology. By analyzing a building scattering model with different orientations and structures, the building ontology model is set up to express multiple characteristics of individual buildings. Under this semantic expression framework, an object-based SAR image segmentation method is adopted to provide homogeneous image objects, and three categories of image object features are extracted. Semantic rules are implemented by organizing image object features, and an expression of individual building objects based on an ontological semantic description is formed. Finally, the building primitives are used to detect buildings among the available image objects. Experiments on TerraSAR-X images of Foshan city, China, with a spatial resolution of 1.25 m × 1.25 m, show that the total extraction rates are above 84%. The results indicate that the ontological semantic method can accurately extract flat-roof and gable-roof buildings larger than 250 pixels with different orientations.

Hao Dong, Xin Xu, Rong Gui, Chao Song, and Haigang Sui.

IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 4742-4745, 2016.

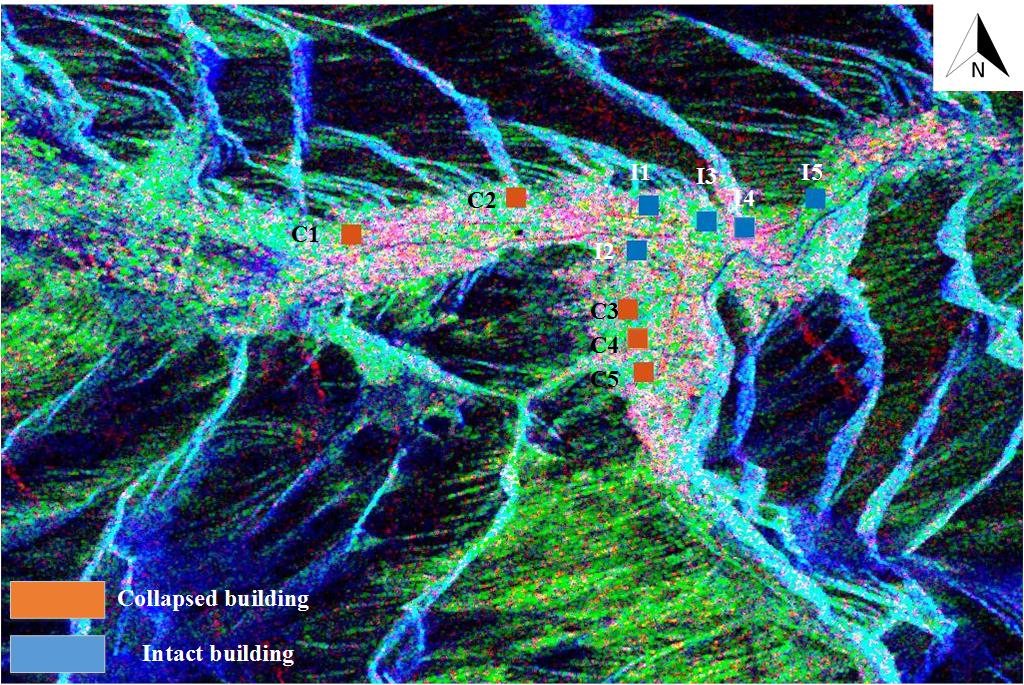

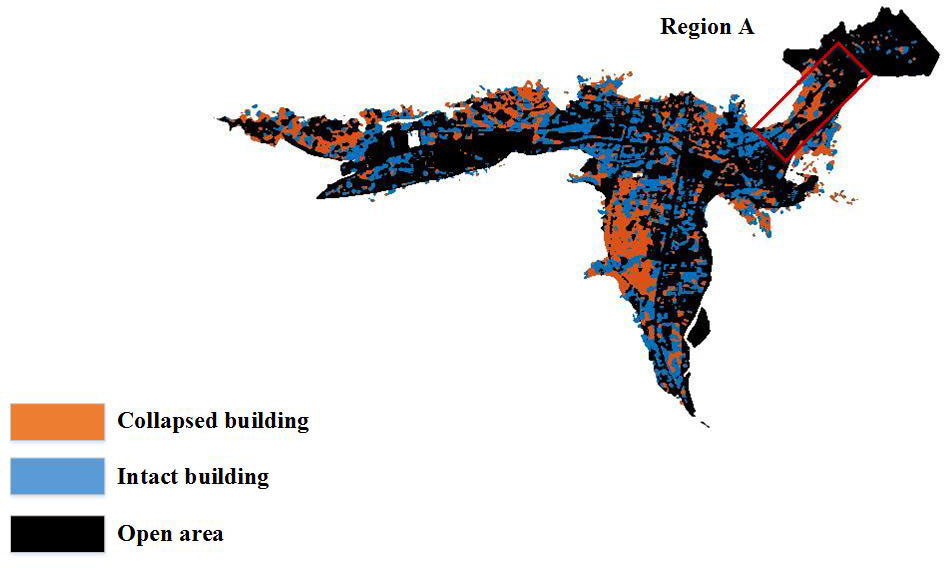

In this paper, we propose a metric learning-based method to extract collapsed buildings from post-earthquake PolSAR imagery. In this method, eight building- and orientation-related features are considered and analyzed: entropy H, the average scattering angle α, anisotropy A, the circular polarization correlation coefficient ρ, and the four scattering powers of the Yamaguchi four-component decomposition with a rotation of the coherency matrix. Then a transformation matrix is learned from collapsed and intact building samples via an improved information-theoretic metric learning (ITML) method. With this transformation matrix, the features are projected into a low-dimensional space to mitigate the impact of topography and building aspect angle. Finally, a k-NN classifier is used to distinguish collapsed from intact buildings. The proposed method is tested on one RADARSAT-2 PolSAR image acquired after the 2010 Yushu earthquake in Qinghai Province, China. Results are validated against a manual interpretation map of a very high resolution (VHR) optical image. They show that the method can efficiently extract collapsed building areas using limited samples and only one post-earthquake PolSAR image.
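The last two steps of the pipeline above (projection with a learned transformation, then k-NN) can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the matrix `transform` stands in for the output of a metric learner such as ITML and is simply supplied by the caller, the improved ITML step itself is not implemented, and the function name `knn_predict` and the toy data are hypothetical.

```python
import numpy as np

def knn_predict(train_x, train_y, test_x, transform, k=3):
    """k-NN vote after the linear projection z = transform @ x.

    transform : (m, d) matrix assumed to come from a metric learner;
                m < d gives a lower-dimensional feature space.
    train_y   : non-negative integer class labels.
    """
    transform = np.asarray(transform, dtype=float)
    train_z = np.asarray(train_x, dtype=float) @ transform.T
    test_z = np.atleast_2d(np.asarray(test_x, dtype=float)) @ transform.T
    train_y = np.asarray(train_y)
    # Squared Euclidean distances in the projected space, (n_test, n_train).
    d2 = ((test_z[:, None, :] - train_z[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    # Majority vote among the k nearest training samples.
    return np.array([np.bincount(train_y[row]).argmax() for row in nearest])

if __name__ == "__main__":
    # Toy two-feature samples: 0 = intact, 1 = collapsed (made-up values).
    x = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
    y = [0, 0, 0, 1, 1, 1]
    print(knn_predict(x, y, [[0.2, 0.1], [5.5, 5.2]], np.eye(2)))
```

Euclidean distance after the projection is equivalent to a Mahalanobis-type distance in the original feature space, which is why a learned transformation and a plain k-NN classifier can be combined in this way.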